Azure OpenAI with LibreChat for Private and Cost-Effective AI Chatbots

AI chatbots are now essential for businesses, developers, and tech enthusiasts. While OpenAI’s ChatGPT is a powerful hosted solution, it has drawbacks such as ongoing subscription costs, privacy concerns, and limited customization options. What if you could run a powerful AI chatbot on your own infrastructure while leveraging the scalability and reliability of cloud-hosted large language models (LLMs)?

This is where LibreChat comes in. LibreChat is an open-source, self-hosted chatbot interface that provides a familiar ChatGPT-style UI while allowing you to connect to various AI models, including OpenAI's API, Azure OpenAI, and even local LLMs. By integrating LibreChat with Azure OpenAI, you get the best of both worlds—a customizable, private chatbot without the need to invest in expensive local hardware.

Why Use LibreChat with Azure OpenAI instead of OpenAI's Hosted ChatGPT?

While OpenAI’s hosted ChatGPT is a convenient solution, it comes with trade-offs in terms of cost, privacy, and customization. By using LibreChat with Azure OpenAI, you can maintain full control over your AI environment while leveraging powerful cloud-based models. Here’s why this approach is worth considering:

| Benefit | Description |
|---|---|
| Privacy & Data Control | Your AI interactions stay within your own Azure subscription, creating a walled-garden setup for sensitive use cases. |
| Cost Efficiency | Instead of paying for OpenAI’s subscription model, you only pay for the API usage, which can be more economical. |
| No Expensive Hardware Needed | Unlike self-hosting large models like LLaMA or Mistral, this eliminates the need for high-end GPUs, making AI more accessible. |
| Customization & Flexibility | LibreChat allows you to tweak UI elements, integrate with other APIs, and modify its behavior to suit your specific needs. |

What This Blog Covers

In this guide, we’ll walk through how to install and configure LibreChat using Docker and set it up to connect with Azure OpenAI as the backend. We’ll cover:

- What LibreChat is and its benefits

- Why using Azure OpenAI with LibreChat makes sense

- Step-by-step installation and configuration of LibreChat

- How to connect LibreChat to Azure OpenAI

- Potential limitations and considerations

By the end of this tutorial, you’ll have a fully operational AI chatbot running in your own environment, leveraging the power of cloud-based LLMs while keeping costs and privacy concerns in check.

What is LibreChat?

LibreChat is an open-source, self-hosted chatbot interface that provides a familiar ChatGPT-style experience while giving users full control over their AI interactions. It acts as a privacy-friendly alternative to OpenAI’s hosted ChatGPT, enabling users to connect to multiple backends, including Azure OpenAI, OpenAI API, and local models.

Unlike OpenAI’s web UI, LibreChat is highly customizable, supports multi-user authentication, and allows integration with various AI models—all while keeping conversations within your own infrastructure.

How Does LibreChat Compare to ChatGPT’s Web UI?

| Feature | LibreChat | OpenAI ChatGPT |
|---|---|---|
| Self-Hosted | ✅ Yes (Run on your own infrastructure) | ❌ No (Cloud-based only) |
| Supports Azure OpenAI | ✅ Yes | ❌ No |
| Multiple AI Model Support | ✅ Yes (Azure OpenAI, OpenAI API, Local LLMs) | ❌ No (Limited to OpenAI’s models) |
| Customizable UI | ✅ Yes (Themes, layout, chatbot behavior) | ❌ No |
| Multi-User Support | ✅ Yes (OAuth, API keys, local authentication) | ❌ No (Single-user only per account) |
| Memory & Chat History | ✅ Yes (Local & cloud-based memory options) | ✅ Yes (Limited to OpenAI's platform) |
| Plugin & API Integration | ✅ Yes (Extend functionality with plugins) | ❌ No |
| Streaming Responses | ✅ Yes (Real-time AI interactions) | ✅ Yes |
| Privacy & Data Control | ✅ Yes (Data stays within your own infrastructure and Azure subscription) | ❌ No (Data stored and processed by OpenAI) |
| Cost Efficiency | ✅ Yes (Pay only for API usage) | ❌ Requires Plus/Enterprise plan for GPT-4 access |

LibreChat is a powerful, flexible, and private AI chatbot interface, perfect for those who want to self-host AI solutions while leveraging the scale of cloud-based LLMs like Azure OpenAI.

💡 Tip: More information about LibreChat’s features can be found in the official documentation: LibreChat - Features.

Prerequisites

Before setting up LibreChat with Azure OpenAI, make sure you have the following in place:

- Azure OpenAI Service
  - You need an Azure OpenAI instance set up and configured.
  - If you haven’t done this yet, check out my previous blog post: 🔗 Deploying Azure OpenAI with Bicep
- Docker Installed
  - LibreChat runs in Docker containers managed by Docker Compose, so you’ll need Docker (including the Compose plugin) installed on your local machine.
  - If you don’t have it yet, download and install it from the 🔗 Docker website.
- Basic Understanding of API Keys
  - LibreChat requires an API key to connect to Azure OpenAI.
  - You should know how to generate and manage API keys in your Azure OpenAI account.
  - You’ll retrieve this key from the Azure portal during the setup process.

Once these prerequisites are in place, you’ll be ready to install LibreChat and connect it to Azure OpenAI.

Step-by-Step Guide to Installing and Configuring LibreChat

With the prerequisites in place, it's time to install and configure LibreChat. LibreChat runs in Docker containers, which keeps deployment simple and repeatable. In this section, we’ll walk through the process of setting up LibreChat locally and preparing it to integrate with Azure OpenAI.

Pulling the LibreChat Git Repository

First, we need to clone the LibreChat repository from GitHub and navigate into its directory.

```shell
# Clone the LibreChat Git repository
git clone https://github.com/danny-avila/LibreChat.git

# Navigate to the LibreChat directory
cd LibreChat
```

Copying Configuration Files

LibreChat includes example configuration files that we need to copy and modify to match our setup. Run the following commands to create default configuration files.

```shell
# Create an .env file
cp .env.example .env

# Create a Docker Compose override file
cp docker-compose.override.yml.example docker-compose.override.yml

# Create a LibreChat YAML file
cp librechat.example.yaml librechat.yaml
```

Configuring the .env file

LibreChat allows multiple AI backends, so we need to set the ENDPOINTS field in the .env file to azureOpenAI to ensure it uses Azure OpenAI as the AI backend.

```shell
#===================================================#
#                     Endpoints                     #
#===================================================#

ENDPOINTS=azureOpenAI
```

It should look something like this.

[Screenshot: LibreChat .env configuration]
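If you would rather script this change than edit `.env` by hand, the step can be sketched as a small shell helper. `set_env_value` is a hypothetical name used only for this illustration, not something LibreChat ships. The sketch assumes the key may already exist in the file, possibly commented out, and simply appends it when it is missing.

```shell
# Hypothetical helper (not part of LibreChat): set KEY=VALUE in an env file.
# Replaces the first existing "KEY=..." or "# KEY=..." line, or appends the pair.
set_env_value() {
  file="$1"; key="$2"; value="$3"
  tmp="$(mktemp)"
  awk -v key="$key" -v value="$value" '
    # First line that sets the key (optionally commented out) is rewritten
    $0 ~ "^#? ?" key "=" && !replaced { print key "=" value; replaced = 1; next }
    { print }
    # Key was never seen: append it at the end of the file
    END { if (!replaced) print key "=" value }
  ' "$file" > "$tmp" && mv "$tmp" "$file"
}

# Example (run from the LibreChat directory):
#   set_env_value .env ENDPOINTS azureOpenAI
```

Writing to a temporary file and moving it into place avoids the differences between GNU and BSD `sed -i`. Note that the replaced file takes on the temporary file's permissions.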

Configuring API Keys

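Azure OpenAI requires API keys for authentication. We’ll set these keys as environment variables so Docker Compose can reference them at startup. The example defines one key per Azure OpenAI resource, with a region label as the suffix (for example, EUS2 for East US 2); define only the ones you need. Keep in mind that this stores the keys in plain text in ~/.bashrc, so restrict access to that file.

```shell
# Persist each key as an environment variable (replace the placeholder values)
echo 'export AZURE_OPEN_AI_KEY_AE="apiKeyGoesHere"' >> ~/.bashrc
echo 'export AZURE_OPEN_AI_KEY_EUS2="apiKeyGoesHere"' >> ~/.bashrc
echo 'export AZURE_OPEN_AI_KEY_SWEC="apiKeyGoesHere"' >> ~/.bashrc

# Reload the shell configuration so the variables are available immediately
source ~/.bashrc

# Confirm the variables are set (note: this prints the keys to the terminal)
echo "$AZURE_OPEN_AI_KEY_AE"
echo "$AZURE_OPEN_AI_KEY_EUS2"
echo "$AZURE_OPEN_AI_KEY_SWEC"
```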
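Echoing the keys confirms they are set, but it also leaves secrets in your terminal scrollback. As an alternative, here is a minimal sketch of a check that reports only whether each variable is set and how long its value is. `check_keys` is a hypothetical helper name introduced for this example.

```shell
# Hypothetical helper: report whether each named variable is set, without
# printing its value. Returns non-zero if any variable is missing or empty.
check_keys() {
  missing=0
  for name in "$@"; do
    # Look up the variable whose name is stored in $name (POSIX-compatible)
    eval "value=\${$name:-}"
    if [ -n "$value" ]; then
      echo "$name: set (${#value} characters)"
    else
      echo "$name: MISSING"
      missing=1
    fi
  done
  return "$missing"
}

# Example:
#   check_keys AZURE_OPEN_AI_KEY_AE AZURE_OPEN_AI_KEY_EUS2 AZURE_OPEN_AI_KEY_SWEC
```

Because the function uses `eval` on the names you pass in, call it only with variable names you typed yourself.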

Configure docker-compose.override.yml file

Next, we need to update the Docker Compose override file to include the environment variables and enable debug logging. This will ensure that the API keys are correctly passed to LibreChat.

```yaml
services:
  api:
    environment:
      - AZURE_OPEN_AI_KEY_AE=${AZURE_OPEN_AI_KEY_AE}
      - AZURE_OPEN_AI_KEY_EUS2=${AZURE_OPEN_AI_KEY_EUS2}
      - AZURE_OPEN_AI_KEY_SWEC=${AZURE_OPEN_AI_KEY_SWEC}
      - DEBUG_LOGGING=true
      - DEBUG_CONSOLE=true
    volumes:
      - type: bind
        source: ./librechat.yaml
        target: /app/librechat.yaml
    image: ghcr.io/danny-avila/librechat:latest
```

Configure librechat.yaml

LibreChat uses a librechat.yaml file for backend configuration. We need to update this file to define the Azure OpenAI endpoints and model settings.

Below is an example configuration for Azure OpenAI. You can adjust it based on your region, deployment name, and model versions.

```yaml
endpoints:
  azureOpenAI:
    # Endpoint-level configuration
    titleModel: "gpt-4o"
    plugins: false
    assistants: false
    groups:
      - group: "Azure Open AI - East US 2 - Gpt-4o Models"
        apiKey: "${AZURE_OPEN_AI_KEY_EUS2}"
        serverless: true
        baseURL: "https://eastus2.api.cognitive.microsoft.com/openai/deployments/gpt-4o/"
        version: "2024-08-01-preview"
        models:
          gpt-4o:
            deploymentName: "gpt-4o"
            version: "2024-11-20"
```

💡 Tip: More information about Azure OpenAI configuration for LibreChat can be found here: 🔗 Azure OpenAI Configuration.
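Before starting LibreChat, it can help to confirm that the key, base URL, and API version work together by calling the deployment directly. The sketch below assumes the standard Azure OpenAI chat completions route (`chat/completions` under the deployment URL, authenticated with an `api-key` header). `build_chat_url` and `test_deployment` are hypothetical helper names, and the request body is a throwaway example.

```shell
# Build the chat-completions URL from a deployment base URL and an API version.
build_chat_url() {
  base="${1%/}"   # drop one trailing slash, if present
  printf '%s/chat/completions?api-version=%s\n' "$base" "$2"
}

# Send a one-message test request and print only the HTTP status code.
# Usage: test_deployment BASE_URL API_VERSION API_KEY
test_deployment() {
  curl -sS -o /dev/null -w '%{http_code}\n' \
    -H "api-key: $3" \
    -H "Content-Type: application/json" \
    -d '{"messages":[{"role":"user","content":"ping"}],"max_tokens":5}' \
    "$(build_chat_url "$1" "$2")"
}

# Example, using the values from the configuration in this guide:
#   test_deployment \
#     "https://eastus2.api.cognitive.microsoft.com/openai/deployments/gpt-4o/" \
#     "2024-08-01-preview" "$AZURE_OPEN_AI_KEY_EUS2"
```

A `200` status suggests the deployment is reachable with that key. A `401` usually points at the key, and a `404` at the deployment name or API version.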

Running LibreChat

Once everything is configured, start the LibreChat containers using Docker Compose.

```shell
docker compose up -d
```

After launching, you can access LibreChat by navigating to 🔗 http://localhost:3080.

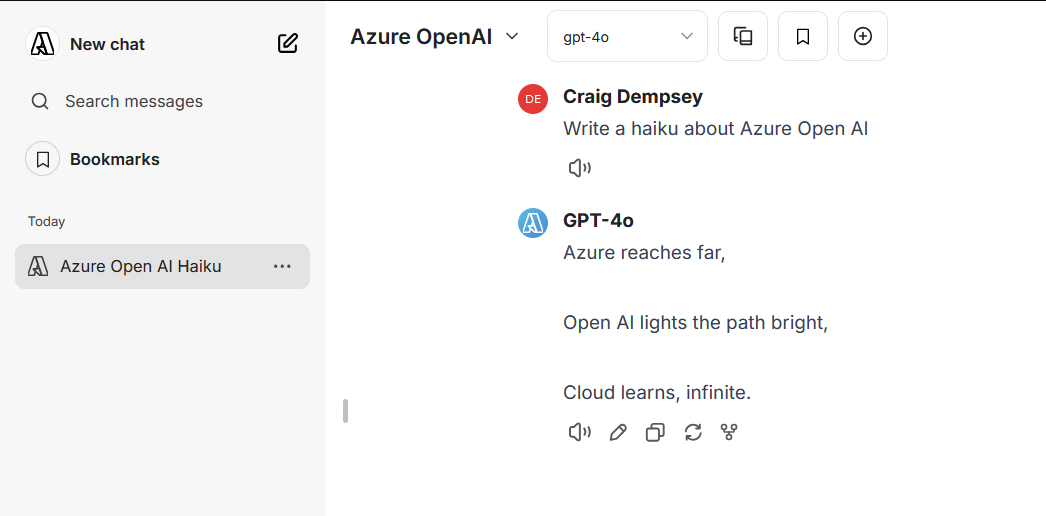

Figure: LibreChat working with Azure OpenAI
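The containers can take a little while to become ready after `docker compose up -d`. As a rough sketch, the following polls the UI until it responds. `wait_for_http` is a hypothetical helper name, and the default attempt count and delay are arbitrary values chosen for illustration.

```shell
# Poll a URL until it answers with a successful HTTP status, or give up.
# Usage: wait_for_http URL [ATTEMPTS] [DELAY_SECONDS]
wait_for_http() {
  url="$1"; attempts="${2:-30}"; delay="${3:-2}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f makes curl exit non-zero on HTTP error statuses (4xx/5xx)
    if curl -fsS -o /dev/null --max-time 5 "$url" 2>/dev/null; then
      echo "up after $i attempt(s)"
      return 0
    fi
    # Pause only if another attempt is still to come
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  echo "no response from $url after $attempts attempt(s)" >&2
  return 1
}

# Example: wait for the UI, and show recent API logs if it never comes up
#   wait_for_http http://localhost:3080 || docker compose logs --tail 50 api
```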

Potential Limitations & Considerations

While using LibreChat with Azure OpenAI provides privacy, cost efficiency, and customization benefits, there are some important limitations and considerations to be aware of before deploying it in a production environment.

Rate Limits and Pricing Considerations

Azure OpenAI operates on a pay-as-you-go model, meaning costs are determined by usage. Key factors to consider:

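- Token Limits & Pricing – Azure OpenAI bills by token count, with rates quoted per fixed block of tokens (such as per 1,000 tokens) and separate rates for input and output tokens. Pricing varies depending on the model (e.g., GPT-4o vs. GPT-4-Turbo).

- Rate Limits – Azure OpenAI enforces tokens-per-minute (TPM) and requests-per-minute (RPM) limits based on the quota assigned to each deployment. Exceeding them returns HTTP 429 (Too Many Requests) responses until the limit window resets.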

- Region Availability – Not all models and features are available in every Azure region. You may need to deploy resources in multiple regions for redundancy.

💡 Tip: Keep an eye on your Azure cost monitoring dashboard and configure budget alerts to avoid unexpected expenses.
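To get a feel for how per-token pricing adds up, here is a back-of-the-envelope sketch. `estimate_cost` is a hypothetical helper, and the prices in the example call are placeholder values, not real Azure prices; substitute the current rates for your model and region.

```shell
# Estimate the cost of a workload, given prices per 1,000 tokens.
# Usage: estimate_cost INPUT_TOKENS OUTPUT_TOKENS PRICE_IN_PER_1K PRICE_OUT_PER_1K
estimate_cost() {
  awk -v in_tok="$1" -v out_tok="$2" -v p_in="$3" -v p_out="$4" \
    'BEGIN { printf "%.4f\n", (in_tok / 1000) * p_in + (out_tok / 1000) * p_out }'
}

# Placeholder prices: 0.005 per 1K input tokens, 0.015 per 1K output tokens.
# 200,000 input tokens and 50,000 output tokens:
#   200 * 0.005 + 50 * 0.015 = 1.00 + 0.75
estimate_cost 200000 50000 0.005 0.015   # prints 1.7500
```

Output tokens are typically priced higher than input tokens, so long responses tend to dominate the bill even when prompts are large.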

LibreChat Features That May Not Work Out-of-the-Box

LibreChat is a powerful self-hosted solution, but some features require additional setup or are not fully compatible with Azure OpenAI:

- Multi-Model Switching – LibreChat supports switching between different models dynamically, but only the models you have deployed in your Azure OpenAI resource are available, and not every OpenAI model is offered in every Azure region.

- Persistent Memory & Assistants – LibreChat includes features for persistent AI memory and OpenAI Assistants, but the sample configuration in this guide disables assistants (assistants: false). Enabling them against Azure OpenAI needs additional configuration, and long-term memory may require external storage.

- Rate Limits & Throttling – ChatGPT hides API quotas behind its own usage caps, whereas Azure enforces per-deployment quotas directly on your API calls, so you may need to optimize your API usage or request a quota increase.

💡 Tip: Check the LibreChat documentation and Azure OpenAI API docs to configure these features properly.
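If you script your own calls against Azure OpenAI (for example, a health check or a batch job alongside LibreChat), one common way to cope with throttling is to retry with exponential backoff. The following is a minimal sketch of that general technique, not something LibreChat or Azure provides; `retry_with_backoff` is a hypothetical helper name.

```shell
# Run a command, retrying with exponentially growing delays while it fails.
# Usage: retry_with_backoff MAX_ATTEMPTS BASE_DELAY_SECONDS COMMAND [ARGS...]
retry_with_backoff() {
  max="$1"; delay="$2"; shift 2
  attempt=1
  while :; do
    # Success on any attempt ends the loop
    "$@" && return 0
    if [ "$attempt" -ge "$max" ]; then
      echo "giving up after $attempt attempt(s)" >&2
      return 1
    fi
    echo "attempt $attempt failed; retrying in ${delay}s" >&2
    sleep "$delay"
    delay=$((delay * 2))        # double the wait before the next attempt
    attempt=$((attempt + 1))
  done
}

# Example: up to 5 attempts, waiting 1s, 2s, 4s, 8s between them
#   retry_with_backoff 5 1 curl -fsS -o /dev/null "$URL" -H "api-key: $KEY" -d "$BODY"
```

This version retries on any failure, not only on HTTP 429. A more careful script would inspect the status code and honor a `Retry-After` header when the service sends one.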

Alternative Self-Hosted Frontends for Azure OpenAI

LibreChat is not the only option for self-hosting an AI chatbot with Azure OpenAI. If you need different features or UI styles, consider these alternatives:

| Alternative | Description | Key Differences |
|---|---|---|
| Chatbot UI | A lightweight self-hosted frontend for OpenAI and Azure OpenAI APIs. | Minimalistic UI, focused on performance. |
| Open WebUI | Feature-rich chatbot frontend with local LLM support. | Supports both cloud and local AI models. |
| FlowiseAI | A no-code platform for building AI chatbots and workflows. | Drag-and-drop workflow builder. |

💡 Tip: If LibreChat doesn't fit your needs, explore these alternatives to find the best match for your use case.

Final Thoughts

By integrating LibreChat with Azure OpenAI, you get the best of both worlds—a customizable, private AI chatbot without the need for expensive local hardware. This setup allows you to maintain full control over your AI environment, optimizing for privacy, cost efficiency, and flexibility while still leveraging the power of cloud-hosted LLMs.

Key Takeaways

- Privacy & Security – Your AI interactions stay within your Azure subscription, avoiding the privacy concerns of public AI services.

- Cost-Effective AI Hosting – You only pay for API usage, which can be cheaper than OpenAI’s subscription models.

- Self-Hosted, No Expensive Hardware – No need for powerful local GPUs—Azure OpenAI handles the heavy lifting.

- Customizable Chat Experience – LibreChat allows you to modify UI, authentication, and backend configurations to suit your needs.

- Scalability & Extensibility – You can expand LibreChat’s capabilities using plugins, API integrations, or alternative frontends if needed.

While LibreChat is a powerful solution, it’s important to be aware of potential limitations, such as Azure OpenAI’s rate limits, model availability, and feature compatibility. If LibreChat doesn’t fully meet your requirements, alternative self-hosted frontends like Chatbot UI, Open WebUI, or FlowiseAI may be worth exploring.

Next Steps

Now that you have LibreChat running with Azure OpenAI, here are some ideas for what you can do next:

- Optimize Your Setup – Fine-tune model parameters, token limits, and caching to improve performance.

- Explore More Customizations – Modify the UI, authentication options, or API integrations to fit your workflow.

- Monitor Costs & Usage – Set up Azure cost alerts and API usage tracking to avoid unexpected charges.

- Experiment with Alternative Frontends – Test Open WebUI or FlowiseAI if you need additional features.

- Deploy to the Cloud – Instead of running LibreChat locally, deploy it as a containerized application using Azure Container Instances (ACI) for a serverless, scalable solution that eliminates the need for local infrastructure.

💡 Looking for more automation in your AI workflows? Consider integrating LibreChat into DevOps pipelines, internal tools, or business applications for a seamless AI-powered experience.

Have you set up LibreChat with Azure OpenAI? What challenges or creative customizations did you implement? I'd love to hear your experiences—drop a comment below or share your insights!