Deploying Azure OpenAI via Bicep: Key Considerations & Lab Setup

Introduction

Azure OpenAI enables organizations to leverage powerful AI models such as GPT-4o, o3-mini, and Whisper for a variety of use cases, from chatbots to code generation and beyond. However, deploying Azure OpenAI via Infrastructure as Code (IaC) using Bicep requires careful planning to ensure a scalable, cost-effective, and secure deployment.

In this article, we’ll set up a simple lab environment to explore the key concepts behind deploying Azure OpenAI with Bicep. This will help us gain a deeper understanding of the service’s deployment types, regional availability, quota limitations, and model options. By the end, we’ll have a working Azure OpenAI deployment with a few models to experiment with, helping us plan more advanced deployments in the future.

What We’ll Cover

Before diving into deployment, we’ll first explore some key areas that are important to understand when setting up Azure OpenAI:

- Azure OpenAI Deployment Types – Understanding the different deployment options.

- Regional Availability – Where Azure OpenAI is available and why it matters.

- Azure OpenAI Models – Overview of supported models and their capabilities.

- Quota Management – Understanding capacity limits and how to request increases.

Once we’ve covered these fundamentals, we’ll walk through a step-by-step Bicep deployment, explaining each component and providing working code that you can use to deploy Azure OpenAI in your own environment.

Azure OpenAI Deployment Types

Azure OpenAI provides flexibility in how and where your AI models are deployed. There are two main deployment types: Standard and Provisioned. Within these, there are different data processing location options that impact latency, quota limits, and regional availability.

Standard Deployments

Standard deployments are the default option, making them ideal for setting up a lab environment. However, for production deployments, careful consideration is required to ensure alignment with business requirements, including privacy, data sovereignty, availability, latency, scalability, and cost considerations such as billing and pricing.

Standard deployments are further divided into:

- Global Standard – The recommended starting point, this deployment type routes traffic dynamically across Azure’s global infrastructure, ensuring high availability and quick access to new models.

- DataZone Standard – Routes traffic within a Microsoft-defined data zone, ensuring processing happens within a specific region or country grouping.

- Azure Geography Standard – Ensures that all processing remains within the specific Azure geography where the resource is created.

Provisioned Deployments

Provisioned deployments offer dedicated capacity and are ideal for workloads requiring low latency and predictable performance. These are further divided into:

- Global Provisioned-Managed – Offers dedicated capacity while still leveraging Azure’s global infrastructure.

- Azure Geography Provisioned-Managed – Ensures dedicated capacity within a specific Azure geography.

Choosing the Right Deployment Type

| Deployment Type | Best For | Key Benefits | Considerations |
|---|---|---|---|
| Global Standard | General workloads | Fast access to new models; easy setup; low-cost, pay-as-you-go token pricing; great for lab environments | Greater latency variation at scale; may not meet data sovereignty and privacy requirements |
| DataZone Standard | Regional processing compliance | Regional traffic routing; traffic stays within data zone boundaries | Limited to Microsoft-defined data zones |
| Azure Geography Standard | Regulatory compliance | Processing within a specific geography | Lower scalability; could impact availability and performance; smaller pool of compute to draw from |
| Global Provisioned-Managed | Large-scale AI workloads | Dedicated resources; lower latency; great for production workloads | Much higher cost; provisioning time |
| Azure Geography Provisioned-Managed | Strict regulatory environments | Guaranteed regional processing; may meet a customer’s data sovereignty and privacy requirements; great for production workloads where availability and performance must be guaranteed | Most expensive option |

Understanding these deployment types helps ensure that your AI workloads meet performance, compliance, and cost requirements.
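In Bicep, the deployment type is not a separate setting: it is the `sku.name` of each model deployment, which is why the parameter files later in this article use values such as `GlobalStandard` and `Standard`. The sketch below uses a hypothetical `deploymentType` parameter to map the five types above to their SKU names and constrain them with `@allowed`. The SKU names reflect the types covered here at the time of writing; check the Microsoft Learn deployment types page for the current list.

```bicep
// Hypothetical parameter: the deployment type maps to the sku.name of a model deployment.
@description('Deployment type for a model deployment.')
@allowed([
  'GlobalStandard' // Global Standard
  'DataZoneStandard' // DataZone Standard
  'Standard' // Azure Geography Standard
  'GlobalProvisionedManaged' // Global Provisioned-Managed
  'ProvisionedManaged' // Azure Geography Provisioned-Managed
])
param deploymentType string = 'GlobalStandard'

// Standard types: capacity is in units of 1,000 tokens per minute for most text models.
// Provisioned types: capacity is the number of provisioned throughput units (PTUs).
@minValue(1)
param capacity int = 10

// Shape of the sku object used by each entry in the deployments array.
var modelSku = {
  name: deploymentType
  capacity: capacity
}

output modelSku object = modelSku
```

Switching from a lab to a production deployment type then becomes a parameter change rather than a template change, although quota and pricing differ substantially between the Standard and Provisioned types.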

ℹ️ More information can be found in the 🔗 Azure OpenAI deployment types section of the Microsoft Learn documentation.

Supported Azure OpenAI Regions

Azure OpenAI is currently available in select regions. However, while the service itself may be available, the availability of specific models varies significantly by region. Before deploying, ensure your chosen region supports the required models.

ℹ️ You can check the latest supported regions and model availability on Microsoft’s official documentation - 🔗 Global Standard Model Availability.

Commonly Supported Regions

- East US

- South Central US

- West Europe

- France Central

- Sweden Central

- UK South

- Japan East

- Australia East

New Model Preview Regions

Newer model previews are often tested in specific regions before wider availability. If you're setting up a lab or testing environment, consider deploying in these regions for early access:

- East US 2

- Sweden Central

- North Central US

Impact of Choosing Different Regions

By understanding regional availability, you can ensure that your Azure OpenAI deployment meets both performance and compliance needs.

| Factor | Impact |
|---|---|
| Latency | Choosing a region closer to your users improves response times. |
| Quota Limits | Some regions may have lower quotas or require quota increase requests. |
| Compliance | Certain regions help meet specific regulatory requirements (e.g., GDPR for EU regions). |
| Model Availability | Some models become available first in a handful of regions, such as East US, before rolling out elsewhere. |
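One way to act on regional availability in the template itself is to restrict the `location` parameter to the regions you have verified. The sketch below is a hypothetical variant of the `location` parameter in the main deployment file; the three regions are simply the ones used in this lab, not a list of every region that supports Azure OpenAI.

```bicep
targetScope = 'subscription'

// Hypothetical guard: only allow the regions used in this lab.
// This is not a complete list of regions that support Azure OpenAI.
@description('Required. Location for all resources.')
@allowed([
  'australiaeast'
  'eastus2'
  'swedencentral'
])
param location string

output selectedLocation string = location
```

A parameter file that targets any other region then fails validation before anything is deployed, at the cost of having to update the list as models roll out to new regions.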

ℹ️ Stay updated on Microsoft’s documentation as new regions are added frequently.

Quota Considerations

Azure OpenAI imposes quota limits on resource usage, which can affect scalability and availability. Understanding these limits and planning accordingly is crucial for ensuring smooth deployment and operation.

Types of Quotas

| Quota Type | Description |
|---|---|
| Request Rate Limits | Requests per minute (RPM) for a deployment, which scales with the tokens per minute assigned to it and depends on the selected model and region. |
| Token Limits | Tokens per minute (TPM) allocated to a deployment, plus a maximum number of tokens per request. |
| Model-Specific Limits | Certain models have stricter usage limits than others. |
| Regional Quotas | Quota is allocated per subscription, region and model, so availability can differ across regions and requires careful selection. |
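Because a deployment fails when the requested capacity exceeds the quota available to you, it can help to encode the ceiling in the template. The sketch below uses a hypothetical `gpt4oCapacity` parameter; the `@maxValue(450)` ceiling only mirrors the largest GPT-4o capacity used in this lab and is a placeholder for whatever the portal reports for your subscription, region and model. It assumes a Standard or Global Standard deployment of a text model, where one capacity unit is 1,000 TPM.

```bicep
// Hypothetical parameter for the GPT-4o deployment capacity.
// Assumes a Standard / Global Standard deployment, where one unit = 1,000 TPM.
@description('GPT-4o capacity in units of 1,000 tokens per minute (TPM).')
@minValue(1)
@maxValue(450) // Placeholder: set to the quota shown in the portal for your region and model.
param gpt4oCapacity int = 34

// Tokens per minute this deployment draws from the regional quota.
var gpt4oTokensPerMinute = gpt4oCapacity * 1000

output tokensPerMinute int = gpt4oTokensPerMinute
```

With the default of 34, the value used in the Australia East parameter file, the output is 34000 TPM.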

For a detailed breakdown of quota limits per model and region, refer to 🔗 Azure OpenAI Service quotas and limits.

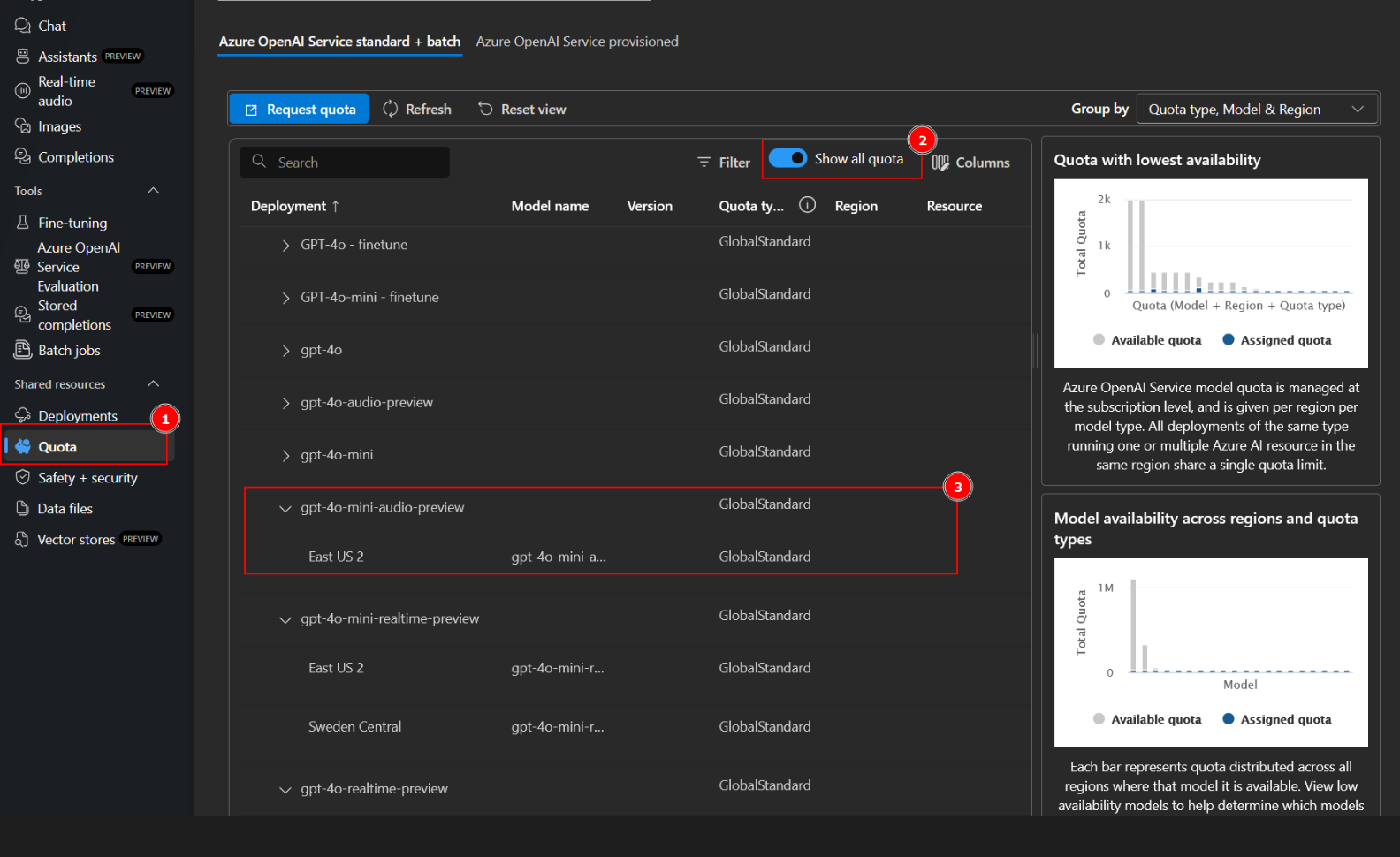

ℹ️ - You can view your quota in the portal via Azure AI Foundry.

Figure: Viewing quotas via the portal

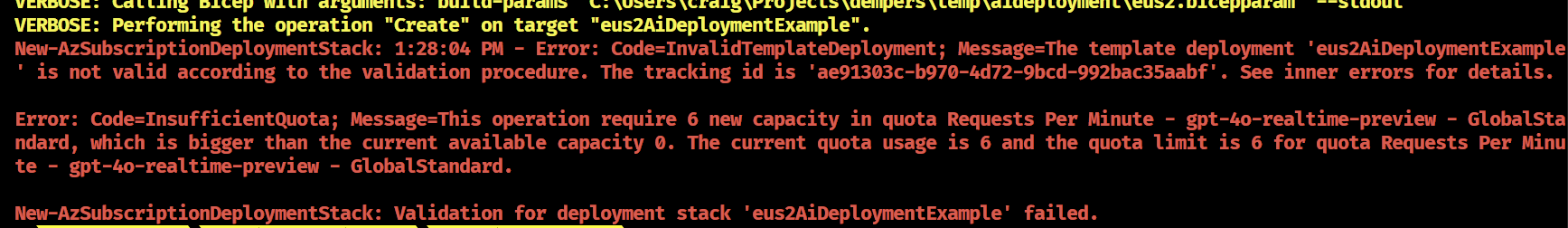

Failing to check your available quota before deploying may result in a quota error 😅.

Figure: Quota error

Overview of Key Models

Below is a breakdown of the key AI models and the Azure regions I deployed them to, along with their strengths and best use cases. As I am based in Australia, I target Australia East where possible and fall back to East US 2 or Sweden Central where needed, for example to preview new models for testing. Here is what I have deployed for testing purposes in my lab.

| Model | Region | Strengths | Best Use Cases |
|---|---|---|---|
| GPT-4o | Australia East, East US 2 | High performance; balanced cost | Chatbots; content creation; coding assistance |
| o3-mini | Request access via waiting list | Enhanced reasoning abilities; cost-effective; lightweight | Complex problem solving in science, math and coding |
| o1-mini | East US 2 | Lightweight model with good performance; advanced reasoning | Small-scale AI workloads; cost-sensitive applications |
| GPT-4o Mini Audio Preview | East US 2 | Low-latency speech input and output for interactive conversations | AI-powered transcription; voice assistants; best suited to pre-recorded audio processing |
| GPT-4o Realtime Preview | East US 2 | Low-latency speech input and output for real-time interactive conversations | Fast real-time AI responses; voice assistants |
| GPT-4o Mini Realtime Preview | East US 2 | Cost-effective; low-latency speech input and output for real-time interactive conversations | Fast real-time AI responses; voice assistants |
| DALL-E 3 | Australia East | Image generation | AI-powered design; creative applications |
| text-embedding-3-large | East US 2 | Optimized for embeddings | Semantic search; NLP tasks |
| Whisper | East US 2 | Speech-to-text | Transcription; accessibility solutions |
| TTS (Text-to-Speech) | Sweden Central | High-quality text-to-speech conversion | AI-generated voice applications |
| TTS-HD | Sweden Central | High-definition text-to-speech | High-quality voice generation applications; offline generation |

This list showcases how different models are deployed across various Azure regions to take advantage of regional availability and optimized capabilities.

ℹ️ The Microsoft Learn documentation has a comprehensive list of 🔗 Azure OpenAI Service Models.

Choosing the Right Model

When selecting a model, it's important to balance performance, cost, and latency. Here are key considerations:

- Performance vs. Cost – GPT-4o offers high performance but at a higher cost, while o3-mini is more budget-friendly.

- Latency Requirements – If real-time interaction is crucial, GPT-4o Realtime models are the best choice.

- Scalability – For high-volume applications, consider quota limits and regional availability.

Deploying an Azure OpenAI model

To ensure a structured approach, we will break the deployment down into logical steps.

Deployment Architecture Overview

Before jumping into the Bicep code, let's visualize our deployment with a simple Mermaid diagram.

Bicep Deployment File

To begin, we first create the Bicep deployment file. This serves as the foundation for defining our Azure infrastructure in a repeatable and automated way.

- The deployment is scoped at the subscription level, which allows us to create a resource group first, followed by all necessary Azure components within it. This gives us a repeatable pattern that can be extended for future deployments.

- The deployment leverages Azure Verified Modules (AVM), which simplifies our work by using pre-built, tested modules instead of manually writing infrastructure definitions from scratch. This enables consistency, security, and scalability without having to reinvent the wheel.

- The deployment file is referenced by Bicep parameter files, with each parameter file representing a specific region and the models that will be deployed in that region.

ℹ️ - The networkAcls section in the Azure OpenAI deployment can be customized to restrict access to an allowed list of IP addresses for enhanced security.

```bicep
// main.bicep
targetScope = 'subscription'

@description('Optional. Location for all resources.')
param location string = deployment().location

@description('Required. Name of the resource group to create.')
param resourceGroupName string

@description('Required. Tags for the resources.')
param tags object

@description('Required. Configuration for the Azure AI Services account and its model deployments.')
param azureAIServiceConfig object

// Create the resource group that will hold the AI Services account.
module resourceGroup 'br/public:avm/res/resources/resource-group:0.4.0' = {
  name: '${uniqueString(deployment().name, location)}-resourceGroup'
  params: {
    location: location
    name: resourceGroupName
    tags: tags
  }
}

// Deploy the AI Services account and its model deployments into the resource group.
module aiService 'br/public:avm/res/cognitive-services/account:0.9.2' = {
  name: 'aiService-${azureAIServiceConfig.name}'
  // The az. prefix avoids a clash with the 'resourceGroup' module symbol above.
  scope: az.resourceGroup(resourceGroupName)
  params: {
    kind: azureAIServiceConfig.kind
    name: azureAIServiceConfig.name
    sku: azureAIServiceConfig.sku.name
    disableLocalAuth: azureAIServiceConfig.disableLocalAuth
    deployments: [for deployment in azureAIServiceConfig.deployments: {
      model: deployment.model
      name: deployment.name
      sku: deployment.sku
      versionUpgradeOption: deployment.versionUpgradeOption
      raiPolicyName: deployment.raiPolicyName
    }]
    location: location
    publicNetworkAccess: azureAIServiceConfig.publicNetworkAccess
    networkAcls: azureAIServiceConfig.networkAcls
  }
  // Ensure the resource group exists before deploying into it.
  dependsOn: [
    resourceGroup
  ]
}
```
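Because `azureAIServiceConfig` is typed as a plain `object`, a typo in a parameter file (for example a misspelled SKU name) only surfaces when Azure rejects the deployment. A possible refinement is a Bicep user-defined type for each deployment entry. The sketch below is a hypothetical, standalone example: the type name `modelDeploymentType` and the `deployments` parameter are illustrative, and the allowed SKU names are limited to the deployment types discussed in this article.

```bicep
// Hypothetical user-defined type for one entry in azureAIServiceConfig.deployments.
type modelDeploymentType = {
  @description('Deployment name that client applications call.')
  name: string

  model: {
    format: 'OpenAI'
    name: string
    version: string
  }

  sku: {
    // Limited to the deployment types covered in this article.
    name: 'GlobalStandard' | 'DataZoneStandard' | 'Standard' | 'GlobalProvisionedManaged' | 'ProvisionedManaged'
    capacity: int
  }

  versionUpgradeOption: 'OnceNewDefaultVersionAvailable' | 'OnceCurrentVersionExpired' | 'NoAutoUpgrade'
  raiPolicyName: string
}

@description('Model deployments to create on the account.')
param deployments modelDeploymentType[]

// Example use of the typed parameter: list the deployment names.
output deploymentNames array = map(deployments, d => d.name)
```

The trade-off is maintenance: the literal unions must be extended before you can use any other deployment type or upgrade option Azure offers.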

Regional Deployments

Australia East Parameter File Example

The following Bicep parameter file defines the Australia East deployment. This region is the natural choice for users based in Australia, as it offers lower latency than overseas regions.

```bicep
// ae.bicepparam
using 'main.bicep'

param resourceGroupName = 'rg-test-ae-ai-01'

param location = 'australiaeast'

param tags = {
  owner: 'A User'
}

param azureAIServiceConfig = {
  name: 'ais-test-ae-ai-01'
  sku: {
    name: 'S0'
  }
  kind: 'AIServices'
  location: location
  disableLocalAuth: false
  publicNetworkAccess: 'Enabled'
  networkAcls: {
    defaultAction: 'Deny'
    ipRules: [
      {
        value: '<your allowed IP addresses>'
      }
    ]
  }
  deployments: [
    {
      model: {
        format: 'OpenAI'
        name: 'gpt-4o'
        version: '2024-05-13'
      }
      name: 'gpt-4o'
      sku: {
        capacity: 34
        name: 'GlobalStandard'
      }
      versionUpgradeOption: 'OnceNewDefaultVersionAvailable'
      raiPolicyName: 'Microsoft.DefaultV2'
    }
    {
      model: {
        format: 'OpenAI'
        name: 'dall-e-3'
        version: '3.0'
      }
      name: 'dall-e-3'
      sku: {
        name: 'Standard'
        capacity: 1
      }
      versionUpgradeOption: 'OnceNewDefaultVersionAvailable'
      raiPolicyName: 'Microsoft.DefaultV2'
    }
  ]
}
```
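The networkAcls block in this parameter file allows a single placeholder address. If you need several addresses, one option is to pass a plain list and build the `ipRules` array in the template. The sketch below assumes a hypothetical `allowedIpAddresses` parameter; the example values come from documentation-only ranges (RFC 5737) and must be replaced.

```bicep
// Hypothetical parameter: allowed public IPv4 addresses or CIDR ranges.
// Example values are documentation-only ranges (RFC 5737); replace them.
param allowedIpAddresses array = [
  '203.0.113.10'
  '198.51.100.0/24'
]

// Builds a networkAcls object in the same shape as the parameter file uses.
var networkAcls = {
  defaultAction: 'Deny'
  ipRules: map(allowedIpAddresses, ip => {
    value: ip
  })
}

output generatedNetworkAcls object = networkAcls
```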

Deploying Australia East Models

ℹ️ - Here is an example of deploying the models as a subscription-scoped deployment stack with the New-AzSubscriptionDeploymentStack PowerShell cmdlet.

```powershell
New-AzSubscriptionDeploymentStack `
  -Name 'aeAiDeploymentExample' `
  -Location 'australiaeast' `
  -TemplateParameterFile 'ae.bicepparam' `
  -Description 'An example Australia East Azure OpenAI deployment' `
  -ActionOnUnmanage 'DeleteAll' `
  -DenySettingsMode 'None' `
  -Verbose
```

East US 2 Parameter File Example

Since some preview models are not available in Australia East, we also deploy an instance in East US 2 for testing a wider range of models.

```bicep
// eus2.bicepparam
using 'main.bicep'

param resourceGroupName = 'rg-test-eus2-ai-01'

param location = 'eastus2'

param tags = {
  owner: 'A User'
}

param azureAIServiceConfig = {
  name: 'ais-test-eus2-01'
  sku: {
    name: 'S0'
  }
  kind: 'AIServices'
  location: location
  disableLocalAuth: false
  publicNetworkAccess: 'Enabled'
  networkAcls: {
    defaultAction: 'Deny'
    ipRules: [
      {
        value: '<your allowed IP Addresses>'
      }
    ]
  }
  deployments: [
    {
      model: {
        format: 'OpenAI'
        name: 'gpt-4o'
        version: '2024-11-20'
      }
      name: 'gpt-4o'
      sku: {
        capacity: 450
        name: 'GlobalStandard'
      }
      versionUpgradeOption: 'OnceNewDefaultVersionAvailable'
      raiPolicyName: 'Microsoft.DefaultV2'
    }
    {
      model: {
        format: 'OpenAI'
        name: 'gpt-4o-mini-audio-preview'
        version: '2024-12-17'
      }
      name: 'gpt-4o-mini-audio-preview'
      sku: {
        capacity: 30
        name: 'GlobalStandard'
      }
      versionUpgradeOption: 'OnceNewDefaultVersionAvailable'
      raiPolicyName: 'Microsoft.DefaultV2'
    }
    {
      model: {
        format: 'OpenAI'
        name: 'gpt-4o-realtime-preview'
        version: '2024-12-17'
      }
      name: 'gpt-4o-realtime-preview'
      sku: {
        capacity: 6
        name: 'GlobalStandard'
      }
      versionUpgradeOption: 'OnceNewDefaultVersionAvailable'
      raiPolicyName: 'Microsoft.DefaultV2'
    }
    {
      model: {
        format: 'OpenAI'
        name: 'gpt-4o-mini-realtime-preview'
        version: '2024-12-17'
      }
      name: 'gpt-4o-mini-realtime-preview'
      sku: {
        capacity: 6
        name: 'GlobalStandard'
      }
      versionUpgradeOption: 'OnceNewDefaultVersionAvailable'
      raiPolicyName: 'Microsoft.DefaultV2'
    }
    {
      model: {
        format: 'OpenAI'
        name: 'o1-mini'
        version: '2024-09-12'
      }
      name: 'o1-mini'
      sku: {
        capacity: 5
        name: 'GlobalStandard'
      }
      versionUpgradeOption: 'OnceNewDefaultVersionAvailable'
      raiPolicyName: 'Microsoft.DefaultV2'
    }
    {
      model: {
        format: 'OpenAI'
        name: 'text-embedding-3-large'
        version: '1'
      }
      name: 'text-embedding-3-large'
      sku: {
        capacity: 350
        name: 'Standard'
      }
      versionUpgradeOption: 'OnceNewDefaultVersionAvailable'
      raiPolicyName: 'Microsoft.DefaultV2'
    }
    {
      model: {
        format: 'OpenAI'
        name: 'whisper'
        version: '001'
      }
      name: 'whisper'
      sku: {
        capacity: 3
        name: 'Standard'
      }
      versionUpgradeOption: 'OnceNewDefaultVersionAvailable'
      raiPolicyName: 'Microsoft.DefaultV2'
    }
  ]
}
```
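Every entry in the East US 2 file repeats the same versionUpgradeOption and raiPolicyName. A possible way to trim the parameter files is to let the template fill in defaults when an entry omits them, using Bicep's safe-dereference (`.?`) and null-coalescing (`??`) operators. This is a hypothetical, standalone sketch of how the deployments loop in main.bicep could be adapted; the default values are assumptions taken from the entries above.

```bicep
@description('Model deployments as supplied by a parameter file.')
param deployments array

// Hypothetical defaults, used only when an entry omits the property.
var defaultVersionUpgradeOption = 'OnceNewDefaultVersionAvailable'
var defaultRaiPolicyName = 'Microsoft.DefaultV2'

// .? yields null for a missing property, and ?? substitutes the default.
var normalizedDeployments = [for item in deployments: {
  model: item.model
  name: item.name
  sku: item.sku
  versionUpgradeOption: item.?versionUpgradeOption ?? defaultVersionUpgradeOption
  raiPolicyName: item.?raiPolicyName ?? defaultRaiPolicyName
}]

output deploymentsWithDefaults array = normalizedDeployments
```

Entries that need a different value, such as the Sweden Central TTS deployments that use Microsoft.Default, would still set raiPolicyName explicitly.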

Deploying East US 2 Models

ℹ️ - Here is an example of deploying the models as a subscription-scoped deployment stack with the New-AzSubscriptionDeploymentStack PowerShell cmdlet.

```powershell
New-AzSubscriptionDeploymentStack `
  -Name 'eus2AiDeploymentExample' `
  -Location 'eastus2' `
  -TemplateParameterFile 'eus2.bicepparam' `
  -Description 'An example East US 2 Azure OpenAI deployment' `
  -ActionOnUnmanage 'DeleteAll' `
  -DenySettingsMode 'None' `
  -Verbose
```

Sweden Central Parameter File Example

The Sweden Central region is used for deploying Text-to-Speech (TTS) and TTS-HD models, as they are only available in select regions.

```bicep
// swec.bicepparam
using 'main.bicep'

param resourceGroupName = 'rg-test-swec-ai-01'

param location = 'swedencentral'

param tags = {
  owner: 'A User'
}

param azureAIServiceConfig = {
  name: 'ais-test-swec-ai-01'
  sku: {
    name: 'S0'
  }
  kind: 'AIServices'
  location: location
  disableLocalAuth: false
  publicNetworkAccess: 'Enabled'
  networkAcls: {
    defaultAction: 'Deny'
    ipRules: [
      {
        value: '<your allowed IP Addresses>'
      }
    ]
  }
  deployments: [
    {
      model: {
        format: 'OpenAI'
        name: 'tts'
        version: '001'
      }
      name: 'tts'
      sku: {
        name: 'Standard'
        capacity: 3
      }
      versionUpgradeOption: 'OnceNewDefaultVersionAvailable'
      raiPolicyName: 'Microsoft.Default'
    }
    {
      model: {
        format: 'OpenAI'
        name: 'tts-hd'
        version: '001'
      }
      name: 'tts-hd'
      sku: {
        name: 'Standard'
        capacity: 3
      }
      versionUpgradeOption: 'OnceNewDefaultVersionAvailable'
      raiPolicyName: 'Microsoft.Default'
    }
  ]
}
```

Deploying Sweden Central Models

ℹ️ - Here is an example of deploying the models as a subscription-scoped deployment stack with the New-AzSubscriptionDeploymentStack PowerShell cmdlet.

```powershell
New-AzSubscriptionDeploymentStack `
  -Name 'swecAiDeploymentExample' `
  -Location 'swedencentral' `
  -TemplateParameterFile 'swec.bicepparam' `
  -Description 'An example Sweden Central Azure OpenAI deployment' `
  -ActionOnUnmanage 'DeleteAll' `
  -DenySettingsMode 'None' `
  -Verbose
```

Final Thoughts

Deploying Azure OpenAI via Bicep provides a structured, repeatable, and scalable way to manage AI workloads in the cloud. By breaking the deployment into logical steps, we ensure that infrastructure is modular, secure, and aligned with best practices.

The deployments demonstrated above are primarily for lab environments and learning purposes. Transitioning this into a production-ready deployment requires deeper considerations, including security, compliance, and operational resilience.

Key Takeaways

- Infrastructure as Code (IaC) – Using Bicep ensures that deployments are automated, version-controlled, and repeatable.

- Regional Considerations – Selecting the right Azure region is critical for latency, compliance, and model availability.

- Scalability & Security – Leveraging Azure Verified Modules (AVM) simplifies infrastructure while ensuring security and performance.

- Flexible Model Deployments – Deploying in multiple regions provides access to the latest models while optimizing for cost and availability.

- Deployment Stacks for Cleanup – Using deployment stacks allows for simplified management and clean deprovisioning of resources.

Next Steps

While this guide focused on a lab setup, moving to a production-ready deployment involves additional planning and refinement. Here are some key areas to consider:

- Monitoring & Logging – Integrate Azure Monitor and Log Analytics to track usage, performance, and potential issues.

- Cost Optimization – Evaluate quota limits, reserved capacity options, and pricing tiers to optimize costs for long-term usage.

- Security & Compliance – Ensure data sovereignty, private networking, access controls, and role-based access control (RBAC) align with organizational policies.
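As a starting point for monitoring and logging, an Azure Monitor diagnostic setting can send the account's logs and metrics to Log Analytics. The sketch below is a hypothetical, resource-group-scoped example using native resource types rather than the AVM module: `accountName`, `workspaceResourceId` and the setting name are illustrative, and the API versions are ones I expect to work at the time of writing, so check them against the current resource reference.

```bicep
@description('Name of an existing Azure AI Services account (hypothetical).')
param accountName string

@description('Resource ID of an existing Log Analytics workspace (hypothetical).')
param workspaceResourceId string

// Reference the account deployed earlier without redeploying it.
resource account 'Microsoft.CognitiveServices/accounts@2023-05-01' existing = {
  name: accountName
}

// Send all log categories and all metrics to Log Analytics.
resource diagnostics 'Microsoft.Insights/diagnosticSettings@2021-05-01-preview' = {
  name: 'send-to-log-analytics'
  scope: account
  properties: {
    workspaceId: workspaceResourceId
    logs: [
      {
        categoryGroup: 'allLogs'
        enabled: true
      }
    ]
    metrics: [
      {
        category: 'AllMetrics'
        enabled: true
      }
    ]
  }
}
```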

With this structured approach, you can confidently deploy and manage Azure OpenAI, ensuring scalability, security, and cost efficiency while preparing for real-world applications.